Compiler usage complexity across time: initial analysis for #GCC

While preparing a lesson, I wondered how #compiler complexity has evolved over time, so I ran a small experiment to look for metrics that capture it. A first idea is to count lines of code, but that is hard to do reliably because a compiler distribution contains many different kinds of files (.c, .h, .cpp, .md, configuration files, docs, etc.).

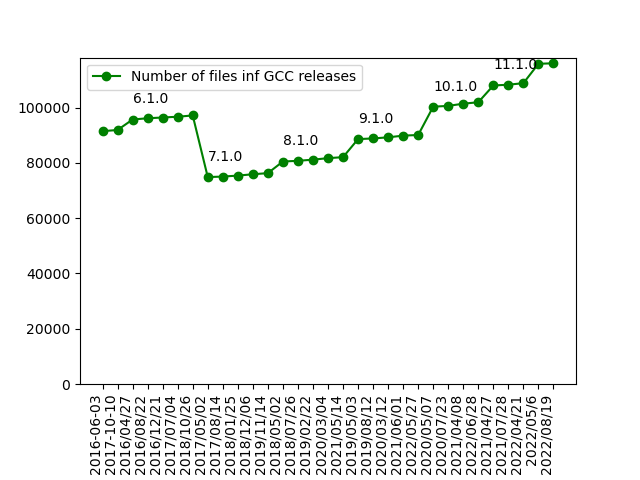

So I started by counting only the number of files in #gcc distributions from 5.1 up to 12.2. The most recent GCC release contains 116008 files! That is starting to be a respectable distribution size. Let's see how it evolves over time:

GCC file count across releases

We can see steady growth, except for the 7.1.0 release, where GCJ support was dropped (https://en.wikipedia.org/wiki/GNU_Compiler_Collection#Supported_languages).
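As an illustration, here is a minimal sketch of how such a file count could be obtained once a release tarball has been extracted. The function name `count_files` and the example directory are hypothetical, and this is not necessarily the script behind the numbers above.

```python
import os


def count_files(root):
    """Count every non-directory entry found under `root`, recursively.

    Assumption: `root` is the directory of an already extracted release.
    """
    total = 0
    for _dirpath, _dirnames, filenames in os.walk(root):
        # os.walk lists non-directory entries (including symlinks to files)
        # in `filenames`; directories are not counted.
        total += len(filenames)
    return total


if __name__ == "__main__":
    # Hypothetical path; adjust to wherever the release was unpacked.
    print(count_files("gcc-12.2.0"))
```

Counting files this way treats a one-line config file and a large source file the same, which is exactly why it is only a rough proxy for size.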

Since I focus on software/hardware interaction, I also looked at two specific trends: the number of GCC switches for machine-dependent optimizations (aka -mXXX) and the number of optimization switches (aka -fXXX). The latest GCC release considered (12.2) contains 853 -f switches and 226 -m switches.

Both counts grow at a similar pace, but the number of target-dependent switches is, in my humble opinion, a significant reflection of how hardware complexity has evolved.

Experimental setup: download all GCC releases from 5.1 to 12.2, count the number of files in each release, build each release, run gcc -v --help, and count how many options start with ‘-f’ and ‘-m’.
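The last step can be sketched in a few lines of Python. This is only one possible way to count. It assumes each option appears at the start of an indented line in the help output, and that an option listed several times should be counted once. The names `gcc_help`, `count_switches` and `OPTION_RE` are hypothetical, and the exact totals depend on how the help text is filtered, so they may differ from the figures quoted above.

```python
import re
import subprocess

# Assumption: an option is the first token of a line and starts with -f or -m.
# The name stops at '=', '<', '[' or whitespace, so "-march=CPU" gives "-march".
OPTION_RE = re.compile(r"^\s*(-[fm][\w.+-]*)")


def gcc_help(gcc="gcc"):
    """Return the standard output of `gcc -v --help` for the given binary."""
    result = subprocess.run(
        [gcc, "-v", "--help"],
        capture_output=True,
        text=True,
        errors="replace",
        check=False,
    )
    return result.stdout


def count_switches(help_text):
    """Count distinct option names starting with -f and -m in help text."""
    names = set()
    for line in help_text.splitlines():
        match = OPTION_RE.match(line)
        if match:
            names.add(match.group(1))
    return {
        "-f": sum(1 for name in names if name.startswith("-f")),
        "-m": sum(1 for name in names if name.startswith("-m")),
    }


if __name__ == "__main__":
    # Point `gcc` at each freshly built release in turn.
    print(count_switches(gcc_help("gcc")))
```

Deduplicating by name is a design choice: the same option can show up in several sections of the help output, and a plain line count would then overstate the number of switches.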