A 35.6TOPS/W/mm² 3-Stage Pipelined Computational SRAM with Adjustable Form Factor for Highly Data-Centric Applications

I’m very proud to collaborate with chip designers, and even more so with memory designers, because modern applications are memory-bound.

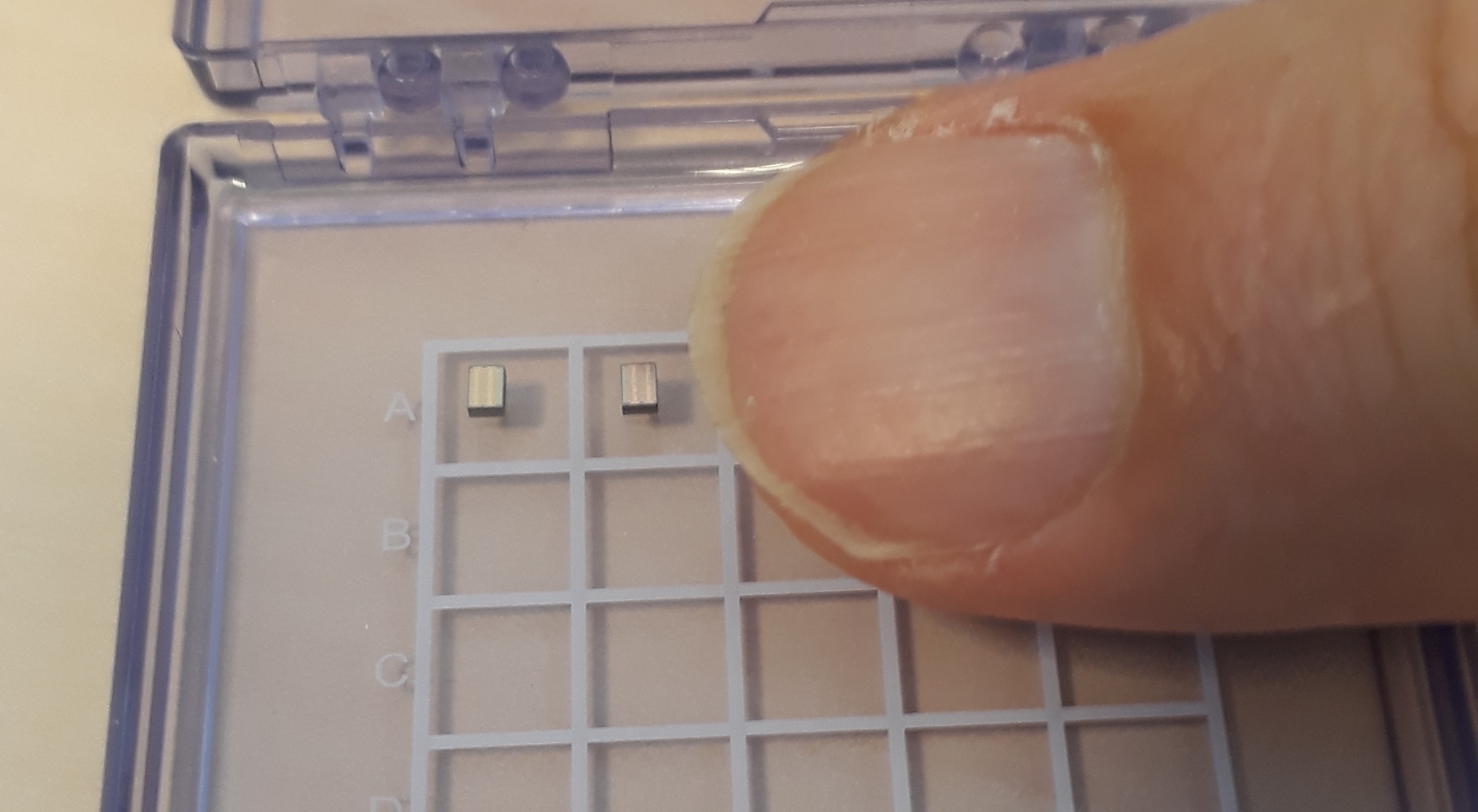

Memory chips are very complex to design. Since 2016, we have been working on a project that gave birth to the memory circuit shown in this photo.

My colleagues were wise enough to give me the 2 non-functional circuits of the batch for illustrative purposes. The circuit details and the methodology are described in this article:

- The preprint is available on HAL: https://hal-cea.archives-ouvertes.fr/cea-02904882

- The final version will be available on IEEE: https://doi.org/10.1109/LSSC.2020.3010377

Here is the article abstract.

Abstract: In the context of highly data-centric applications, close reconciliation of computation and storage should significantly reduce the energy-consuming process of data movement. This paper proposes a Computational SRAM (C-SRAM) combining In- and Near-Memory Computing (IMC/NMC) approaches to be used by a scalar processor as an energy-efficient vector processing unit. Parallel computing is thus performed on vectorized integer data on large words using usual logic and arithmetic operators. Furthermore, multiple rows can be advantageously activated simultaneously to increase this parallelism. The proposed C-SRAM is designed with a two-port pushed-rule foundry bitcell, available in most existing design platforms, and an adjustable form factor to facilitate physical implementation in a SoC. The 4kB C-SRAM testchip of 128-bit words manufactured in 22nm FD-SOI process technology displays a sub-array efficiency of 72% as well as an additional computing area of less than 5%. The measurements averaged on 10 dies at 0.85V and 1GHz demonstrate an energy efficiency per unit area of 35.6 and 1.48 TOPS/W/mm² for 8-bit additions and multiplications with 3ns and 24ns computing latency, respectively. Compared to a 128-bit SIMD processor architecture, up to 2× energy reduction and 1.8× speed-up gains are achievable for a representative set of highly data-centric application kernels.
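To make "parallel computing on vectorized integer data on large words" more concrete, here is a minimal software sketch of what one 8-bit addition on a 128-bit word means: the word holds 16 independent 8-bit lanes, and all 16 lanes are added in a single operation. This is only an illustration of the lane-wise semantics using the classic SWAR (SIMD Within A Register) trick; it is not the C-SRAM's hardware implementation. The names (`pack`, `unpack`, `packed_add`) are hypothetical, and wrap-around (modulo 256) overflow per lane is an assumption, not a behavior stated in the paper.

```python
WORD_BITS = 128                  # C-SRAM word width reported in the abstract
LANE_BITS = 8                    # 8-bit integer elements, as in the measured additions
LANES = WORD_BITS // LANE_BITS   # 16 lanes per 128-bit word
LANE_MASK = (1 << LANE_BITS) - 1

# 0x8080...80: the top bit of every lane; LOW is its complement 0x7f7f...7f.
HIGH = int.from_bytes(bytes([0x80] * LANES), "little")
LOW = HIGH ^ ((1 << WORD_BITS) - 1)


def pack(values):
    """Pack 16 unsigned 8-bit values into one 128-bit word (lane 0 = lowest byte)."""
    if len(values) != LANES:
        raise ValueError(f"expected {LANES} values, got {len(values)}")
    word = 0
    for i, v in enumerate(values):
        word |= (v & LANE_MASK) << (i * LANE_BITS)
    return word


def unpack(word):
    """Split a 128-bit word back into its 16 8-bit lanes."""
    return [(word >> (i * LANE_BITS)) & LANE_MASK for i in range(LANES)]


def packed_add(a, b):
    """Add all 16 lanes at once; carries never cross lane boundaries.

    The low 7 bits of each lane are added first (their sum fits in 8 bits,
    so nothing spills into the next lane), then the top bit of each lane is
    fixed up with XOR. Each lane therefore wraps around modulo 256
    (assumed overflow behavior).
    """
    partial = (a & LOW) + (b & LOW)
    return partial ^ ((a ^ b) & HIGH)


if __name__ == "__main__":
    a = pack([200] * LANES)
    b = pack([100] * LANES)
    print(unpack(packed_add(a, b)))  # 16 lanes of (200 + 100) % 256 = 44
```

For scale, at the reported 1GHz clock, the 3ns latency of an 8-bit addition corresponds to 3 clock cycles and the 24ns latency of an 8-bit multiplication to 24 clock cycles.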